Abstract

Many HIV prevalence surveys are plagued by the problem that a sizeable number of surveyed individuals do not consent to contribute blood samples for testing. One can ignore this problem, as is often done, but the resultant bias can be of sufficient magnitude to invalidate the results of the survey, especially if the number of non-responders is high and the reason for refusing to participate is related to the individual’s HIV status. One reason for refusing to participate may be for reasons of privacy. For those individuals, we suggest offering the option of being tested in a pool. This form of testing is less certain than individual testing, but, if it convinces more people to submit to testing, it should reduce the potential for bias and give a cleaner answer to the question of prevalence. This paper explores the logistics of implementing a combined individual and pooled testing approach and evaluates the analytical advantages to such a combined testing strategy. We quantify improvements in a prevalence estimator based on this combined testing strategy, relative to an individual testing only approach and a pooled testing only approach. Minimizing non-response is key for reducing bias, and, if pooled testing assuages privacy concerns, offering a pooled testing strategy has the potential to substantially improve HIV prevalence estimates.

Similar content being viewed by others

Introduction

Hiv prevalence estimates derived from national population-based surveys are often considered the gold standard of hiv prevalence estimation when non-response rates are low [1–4]. However, finding and obtaining a blood sample from all individuals surveyed is a considerable, if not almost impossible, challenge. Frequently, migrant or homeless populations are ignored and a large proportion of the sample does not consent to being tested, potentially inducing (unmeasured) bias in the hiv prevalence estimators [4].

In this paper, we discuss a method for promoting increased testing consent rates. Individual reluctance to test may be influenced by several factors, including those related to social stigma associated with hiv and difficulty in accessing treatment for some testing individuals [5, 6]. While no consensus has been reached on reasons for test refusal or failing to return for test results, fear is a common theme in such studies [7], and there is evidence that those who are aware of their positive hiv status are less likely to consent to testing [8].

Additionally, the hiv testing protocol is an important factor in gaining test consent [9]. The method of asking for consent, specifically convincing survey participants of the importance of their contribution to fighting the hiv epidemic while assuaging concerns about privacy of test results, could be key in improving test consent rates. Previous studies have assessed the impact of anonymity in testing by examining testing rate trends following transitions from anonymous to name-based reporting; there is some evidence in the literature that eliminating truly anonymous testing would impact individuals’ decisions to test hiv, though the results are not consistent (see [10, 11] and references within).

One option for estimating prevalence while preserving the nonidentifiability of individuals, at the cost of greater uncertainty, is pooled testing [12], where individual samples are combined to form pooled samples. In this paper, we propose a testing protocol that supplements the presumably more informative individual testing with pooled testing. Each sampled individual is asked to provide a blood sample for disease testing, where the investigators (and, by choice, the individual as well) learn the disease status of the individual. If the individual rejects this testing option, we ask if he will provide a non-identifiable blood sample which will be combined with other samples in a pooled test and, in which case, no one knows this individual’s test result. If the individual does not consent to pooled or individual testing, then he is not tested for the disease, of course.

Ideally, by providing the pooled testing option, the amount of missingness in the sample is substantially reduced. Pooled testing strategies are frequently used in practice [13–18], but to our knowledge, have never been discussed in the context of improving survey response rates by varying the testing options. In this paper, we propose such an estimator and study its analytical properties. First, we discuss testing consent rates in hiv prevalence estimation surveys and give examples of when non-response bias is an issue in such surveys. We then discuss how to implement a new pooled testing strategy and propose an estimator for prevalence based on this testing strategy, assuming perfect sensitivity and specificity of the test. We present results from a simulation study examining small sample properties of this estimator and illustrating the importance of pool size choice in such a survey design.

Missingness in HIV prevalence estimation surveys

Surveys designed to estimate hiv prevalence can have low testing consent rates, and test refusal is potentially associated with risk of hiv infection. Depending on what is driving test refusal in the population, missingness in a sample may induce bias in the estimator of prevalence [4]. Reviews of national hiv prevalence surveys have concluded that, while those who refuse testing may have a higher hiv prevalence, bias induced by missingness is usually negligible because response rates are on average sufficiently high [2, 3]. However, the authors make strong assumptions about missingness patterns in the survey and also reference many surveys in which response rates are low enough that it is difficult to believe that bias in prevalence estimators is negligible. For instance, the hiv testing consent rate is 62.2% in men and 68.2% in women in the most recent national South African survey [19], and consent rates are even lower in the longitudinal hiv surveillance survey in rural KwaZulu Natal, South Africa, described in [20].

A taxonomy of the types of patterns of missingness is useful for analysis [21]. When missingness is at random, survey calibration techniques (such as weight-class adjustments, poststratification, and imputation) allow for adjustment of prevalence estimators to remove bias [22]. All such methods depend on the assumption of missing at random, which states that conditional on covariates, the outcome of interest (hiv status) is independent of the missingness mechanism (test refusal). Many studies have shown that hiv test results are not missing completely at random (see [7] and references within); further, assuming missingness is at random is a strong and untestable assumption.

When asking individuals to consent to hiv testing, regardless of how much covariate information is available on these individuals, one could reasonably infer that missingness is nonignorable, is associated with disease status, and cannot be completely explained by individual characteristics. For instance, individual covariate information is likely to be unreliable or sparse when dealing with sensitive topics, such as risky sexual behavior, fidelity, or drug use [23]. Sensitive issues such as partaking in risky sexual behavior are of course associated with hiv status, and studies suggest that there are inconsistencies in reporting of sexual behavior in Demographic Health Surveys (dhs) [24, 25]. Further, using dhs data from Zambia, one recent study concluded that models based on observed covariates (i.e. assume missingness is at random) are insufficient to correct for selection bias in hiv prevalence estimation surveys, though this study relied on strong, untestable modeling assumptions [26]. Such studies reiterate that the best way to ensure unbiased prevalence estimates is through eliminating non-response.

When missingness is not at random, the (heuristically) most conservative range of estimates for hiv prevalence in a sample calculates the lower bound for prevalence by assuming that all non-responders are hiv negative and the upper bound by assuming all non-responders are hiv positive. Such plausibility bounds are obviously very wide when the proportion of non-responders is high but are also arguably the most honest bounds for our certainty regarding the sample prevalence estimates. Specifically, if only a fraction r of the sample responds to the survey, the prevalence of hiv in the sample is p=r p R +(1−r)p N , where p R is the sample prevalence in the responders and p N denote sample prevalence in the non-responders. Since we only know that p N is between 0 and 1, the lower bound for prevalence in the sample is r p R and the upper bound is r p R +(1−q). The width of this interval is 1−r, illustrating the importance of maximizing r in the presence of nonignorable missingness.

As an example, consider the 2004 dhs survey in Malawi [27]. The overall response rate for hiv testing was 70% in women and 63% in men. Of those interviewed by health workers, 22% refused hiv testing; the remainder of the non-response was driven by inability to locate sampled individuals for testing. In the Lilongwe district, the response rate was only 39%, with 49% of subjects refusing hiv testing and the rest unable to be located. The observed prevalence of hiv for the Lilongwe district was 3.7% with 95% CI [sic] (1.0%, 6.4%), whereas the observed prevalence in the rest of the country was 13.2% with 95% CI [sic] (12.3%, 14.2%). The hiv prevalence estimates for Lilongwe were deemed “implausibly low” and prevalence was imputed for everyone in the district of Lilongwe based on demographic information obtained in the household survey. The imputed prevalence for the Lilongwe district was estimated at 10.3% with 95% CI [sic] (9.3%, 11.3%).

Consider the conservative plausibility bounds mentioned above for the Lilongwe district. There were 500 individuals eligible for hiv testing in the district of Lilongwe, but only 193 of those eligible consented to hiv testing. Based on this information, we deduce that about seven out of the 193 consenters were hiv positive. If we assume all 307 non-consenters were hiv negative, a lower bound for hiv prevalence is 1.4% with 95% CI (0.4%, 2.4%); likewise, if we assume all 307 non-consenters were hiv positive, an upper bound for hiv prevalence is 62.8% with 95% CI (58.6%, 67.0%). By taking the lower confidence bound when we assume all non-responders are hiv negative and the upper confidence bound when we assume all non-responders are hiv positive, we can obtain the most conservative plausibility bounds at the 95% confidence level. In the Lilongwe case, the heuristic “plausibility bounds” for the prevalence of hiv are (0.4%, 67.0%), which now includes the national prevalence estimate for hiv in Malawi. While no one would ever present such wide plausibility bounds, these extreme bounds show the true amount of certainty we have when we know nothing about non-responders. The Lilongwe example illustrates the dangers of high non-response in an hiv prevalence estimation survey.

In many hiv prevalence surveys, non-response rates may be modest, and missing at random corrections will suffice for producing nearly unbiased HIV prevalence estimates. For instance, [3] list nonresponse rates by country and sex for DHS/AIS surveys; response rates exceeded 90% for both males and females in the Rwanda and Cambodia 2005 AIS surveys. Many other countries also retained high testing rates. However, in locations such as Malawi and South Africa, where prevalence and non-response are both high, alternative testing strategies are a viable tool for decreasing non-response and improving prevalence estimates.

Testing logistics and pool size

In standard hiv testing surveys, individuals are only asked to consent to an hiv test once. Using a pooled testing option, we offer two opportunities to consent to hiv testing. For those who select the non-identifiable pooled testing option, individual blood samples are pooled with k−1 other blood samples (k>1), and only the test result of the pool is known to anyone. We delay discussion about appropriate choice of k to below. Though we anticipate that some will still refuse both individual and pooled hiv testing, the intent is to lower missingness in the sample (and the associated inherent bias in the estimator) by including individuals who refuse individual testing but are willing to provide a sample for pooled testing. We propose a combined individual and pooled testing prevalence estimator, for which privacy is preserved but prevalence can be estimated more accurately than when using only those willing to submit to individual testing.

Many possibilities exist for adapting testing protocols to include a pooled testing option. For instance, participants could first be asked to take a rapid test and learn their status; alternatively, standard ELISA blood tests could be administered, with the option of obtaining results at a later date. For those who were not interested in either method of individual testing, the pooled testing option would be explained. A simple illustration of how pooling works might aid in understanding how the protocol works (for instance, pouring together vials of different colored water into a cup).

Preserving privacy of the pooled testers is a primary concern in our protocol. If a pool tests negative, we know the test results of individuals in the pool (negative) within the bounds of the sensitivity of the testing kit. Presumably, individuals are not as concerned with the confidentiality and identifiability of negative test results, and we are not concerned with this situation. If a pool tests positive, individual test results in the positive pool are non-identifiable for pools of size 2 or bigger. Of course, the issue of trust is important; those carrying out the survey need to convince those surveyed that their privacy requests be respected if we wish to lower the refusal rate as much as possible. Furthermore, ethical non-identifiability for positive pools may mandate larger pool sizes.

If a pool tests positive, the probability that an individual is positive is p/(1−(1−p)k) in a population with prevalence p. For instance, when the population prevalence is 20%, the probability that an individual in a positive pool is hiv positive is 1 when for pool size k=1 (individual testing), 0.56 when k=2, 0.41 when k=3, 0.34 when k=4, 0.30 when k=5, 0.27 when k=6, and 0.25 when k=7. Since the population prevalence is 20%, without testing at all, the probability a person is infected is 20%. As k increases, the probability that an individual tests positive given the pool tests positive approaches the population prevalence. Thus, as pool size and prevalence increase, we gain less additional information about the disease status of individuals in a pool when the pool tests positive.

However, using pool sizes that are too large decreases accuracy of the pooled testing estimator (we further discuss the implications of pool size below). The key idea in this confidentiality protection problem is “to balance the need for confidentiality protection with legitimate needs of data users” [28]. The United States’ Federal Commission for Statistical Methodology lays out threshold rules for identifiability of survey responses for tabular data within U.S. Agencies; generally, at least 3-5 responses per cell are required for non-identifiability, but this minimum choice of responses per cell often varies with the sensitivity of the information and potential for disclosure [29]. In order to use the pooled samples, pool size must be carefully selected by balancing the precision of the pooled estimator with the ethical restraints imposed by nondisclosure of individual test information.

In this paper, we consider pool sizes to be between 3 and 7. While a smaller pool size will always result in a better estimator, pool size must be sufficiently large to protect the confidentiality of the testers; we assume ethical limitations would never mandate having a pool size larger than 7 and use this as our maximum pool size. In settings with low prevalences and cost constraints, higher values of k would be warranted.

Framework for combining individual and pooled test results

To construct an estimator for hiv prevalence based on the pooled testing strategy, we assume n individuals are randomly sampled from a large population with hiv prevalence p. Further, we assume that the hiv test is a perfect test, i.e. the sensitivity and specificity are 1 (we comment further on this assumption in the Conclusion).

The sample can be partitioned into three separate groups: 1) those who consent to testing for a disease, 2) those who only consent to unidentifiable pooled testing, and 3) those who refuse testing altogether. The prevalence in each of these three groups may differ. To estimate prevalence, we can collapse across these partitions. For a population with prevalence p,

where r i is the proportion of the population in testing consent group i and p i is the prevalence of hiv in group i. Individuals with i=1 consent to individual testing, with i=2 consent to pooled testing only, and with i=3 do not consent to test.

Note that we can never know p3, the prevalence in the non-consenters, and any estimator of p will always be biased unless everyone consents (r3=0); or we adjust the prevalence estimator based on some known and identifiable structure on p3, such as p3=p2. However, we can estimate the probability of having hiv given that one consents to test, denoted p T . Conditioning on the subset of the population who consents to some form of testing, we define q1 as the proportion of the population who consents to individual testing; and q2 as the proportion who consents to pooled testing. We estimate hiv prevalence in the testing consent group as p T =p1q1+p2q2.

We estimate q1,q2, and p1 using sample quantities from the data (e.g. q1 is the fraction of individuals who test individually, and p1 is the fraction of the individual testers who are hiv positive). Because of the desire to preserve anonymity, we cannot directly calculate the fraction of HIV positive individuals in the pooled testing population, p2. Rather, we observe the number of pools that test positive, denoted Z.

Among the pooled testers, we model Z using a binomial distribution, with sample size n p (the number of pools) and proportion of positive pools p z =1−(1−p2)k, where k is the number of samples per pool; intuitively, the expression for p z is equivalent to 1-P(all samples in a pool test negative). Inverting the formula for p z , it follows that p2=1−(1−p z )1/k. Define . We can estimate the prevalence in the pooled testing population as . This estimator is unbiased in large samples [13], and, for a fixed sample size, the variance of increases as the pool size k increases.

We estimate p T using the sample quantities from the data, ; we refer to as the combined prevalence estimator. Further, is asymptotically normally distributed with mean p T and variance:

where m is the total number of testing individuals in the sample. For more details, see Appendices 3-6 in Additional file 1. We can obtain a variance estimate for , , by plugging in the sample estimates into the above equation. Therefore, we can define a 100(1−α)% Wald-type confidence interval for as .

Comparing testing strategies with large sample sizes

To assess the properties of our combined testing strategy (assuming a perfect test), we consider relative low, moderate, and high population prevalence settings where individual testing consent rates are low. In the low prevalence setting, we assume the prevalence in the individual testers is 5% and the prevalence in the pooled testers is 10%; in the moderate setting, prevalence in individual testers is 15% and in pooled testers is 20%; and in the high prevalence setting, prevalence in the individual testers is 20% and in the pooled testers is 30%. These settings are important to keep in mind and are referenced throughout the paper as the low, moderate, and high prevalence settings. We assume that the sub-population that consents to individual testing constitutes 60% of the total testing population and the sub-population that will only contribute a sample for pooled testing constitutes 40% of the population.

We contrast the combined pooling and individual testing estimator with alternatives using asymptotic mean-squared error (mse), defined as the sum of the squared-bias and the variance of the estimator when sample sizes are large. It is important to balance both precision and accuracy when contrasting estimators, and we select mse because it incorporates bias and variance. Later, we address the scenario when sample sizes are not large and finite-sample bias can arise.

First, we contrast the combined estimator to the prevalence estimator resulting from only offering individual testing. If the pooled testing option is omitted and individual testing is the sole testing option, an estimate of the prevalence in the population is , the estimated hiv prevalence in the individual testing population. Assuming for now that r3=0, the bias in is p1−p=r2(p1−p2), which is non-zero when p1≠p2 and r2≠0. However, even if p1≈p2, the estimator using pooled samples will usually have a smaller variance than the estimator that does not incorporate pooled testing, as long as a sufficient proportion of the population consents to pooled testing.

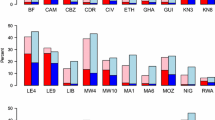

Since the combined estimator is asymptotically unbiased, the asymptotic mean-squared error of the estimator is identical to the variance of the estimator. The estimator using only individual testers has mse equal to the sum of the variance of and the square of the bias of the prevalence estimator when the pooled testers are excluded. The ratio of the mse using the pooled strategy versus the mse using individuals only is always less than one when the pool size is less than 7 for the low, moderate, and high prevalence settings (Figure 1), indicating that the combined estimator outperforms the estimator using only individuals. Indeed, in the situations in which pooled testers have a higher prevalence than individual testers, the mse ratio ranges between 0.1 and 0.4, and the combined estimator provides substantial improvement over the estimator ignoring pooled testers. Even when the prevalence is the same in the pooled and individual testing populations, the mse ratio ranges between 0.6 and 0.85, and the combined estimator still outperforms the individuals-only estimator.

Comparing the asymptotic properties of the combined estimator to the individuals-only estimator. Ratio of the asymptotic mse for the combined estimator to the ratio of the asymptotic mse for the estimator using only individuals in the low, moderate, and high prevalence settings for two scenarios: (a) pooled testers have a higher prevalence than individual testers, m=1000; (b) the prevalence in the pooled testers equals that in the individual testers (this ratio is independent of m). The combined estimator always has lower mse than the individuals only estimator in these settings.

On the other hand, only offering pooled testing to everyone in the sample, as suggested in [12], is cheaper than offering an individual and pooled testing option, because fewer tests are performed. For instance, we could design a study which only offers a pooled testing option and estimate prevalence using the maximum likelihood estimator for pooled samples discussed previously. The prevalence estimator resulting from pooling everyone is asympotitcally unbiased, because we include the entire testing population.

Testing using the combined estimator results in a smaller asymptotic mse than the estimator which only offers pooled testing (Figure 2), assuming the sample size is the same for both estimators. The mse for the combined estimator is 10% less than the mse for the pooled testing only estimator in the moderate and high prevalence settings, with less reduction in mse in the low prevalence setting. The combined estimator provides an improvement in mse because the variance of the pooled prevalence estimator always decreases as the pool size decreases; intuitively, individual test results provide more information than pooled test results on the same number of people, so providing an individual testing option is optimal. Further, if everyone is offered pooled testing, individual results are no longer available to those who are interested in learning their hiv status and thus may be unethical [30]. And lastly, the survey protocol we suggest gives individuals two opportunities to consent to testing (pooled or individual), rather than only asking individuals to test once as in the pooled-testing only design, which could help increase consent rates. Therefore, having both pooled and individual testing options is advantageous.

Comparing the asymptotic properties of the combined estimator to the pooling-only estimator. Ratio of the mse for the combined estimator to the ratio of the mse when everyone is offered pooled testing, as a function of pool size for the low, moderate, and high prevalence settings when pooled testers have a higher prevalence than individual testers. The combined estimator always has lower mse than the estimator where everyone is offered pooled testing in these settings.

Assessing the finite sample properties of the combined estimator

Pooling has its limitations that are a function of prevalence. When the prevalence is high, then, to be informative, the pools must be so small as not to have all the pools test positive [13, 31]. [18] investigate the properties of pooled estimators in high prevalence settings. On the other hand, to retain anonymity, the pool sizes cannot be too small. Statistically, pooled estimators are potentially unstable when the prevalence in the pooled-sample population (p2) is high or when the number of individuals consenting to pooled testing is small.

In the case of most diseases that are not extremely rare, such as hiv, the disease prevalence is typically high enough that some pools will test positive, and we are not concerned with zero pools testing positive. However, in moderate to high prevalence settings, the probability that all pools will test positive must also be addressed. This probability is P(Z=n p )=(1−(1−p2)k)n p , which decreases as n p increases and/or k and p2 decrease. Therefore, choosing a sufficiently small pool size k and obtaining a sufficiently large number of pools n p are necessary to ensure that the estimate of the population prevalence in the pooled testing group is reasonable. Note that the lower bound for k is determined by how large the pools should be to assuage concerns about identifiability of test results.

Pooled prevalence estimators are biased in finite samples [13], and consequently, is only asymptotically unbiased (see Appendix 5 in Additional file 1). While replacing an estimator with a jackknifed version of the estimator typically reduces finite sample bias [32–34], in simulation, we find that the jackknife estimator provides little improvement over the original estimator (results not shown). Other suggestions for bias correction to the pooled prevalence estimator have been suggested [35]. For instance, in high prevalence settings, [18] propose a double grouping estimator.

Burrows [31] suggests the simple estimator (subsequently referred to as the Burrows estimator):

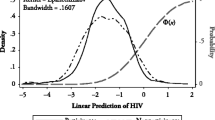

We can use the Burrows estimator to define a new prevalence estimator , which is constructed by substituting for in the combined estimator. This new estimator has much smaller finite sample bias than in small samples. In Figure 3, we plot the percent bias in the prevalence estimator for and for pool size k=7 (the size for which we see the greatest finite-sample bias). The original estimator always overestimates the prevalence, with the severity of the bias decreasing as the sample size increases. The Burrows estimator has negligible bias, even for sample sizes as small as 100. Consequently, we recommend using in practice rather than .

Percent bias in the combined estimator. Percent bias in the MLE estimator (thin lines) and the Burrows estimator (bold lines) for pool size k=7 as a function of sample size for low, moderate, and high prevalence settings. Using the Burrows estimator results in a substantial reduction in finite sample bias.

In a simulation study, we evaluate maximum pool sizes and minimum number of pools such that the bias and standard error of are small and the 95% Wald confidence interval coverage of is near 0.95. Individuals who do not consent to testing at all are ignored throughout the simulations. Simulation parameters are chosen to reflect low, moderate, and high prevalence settings which have low testing consent rates for individuals, as described previously. We perform the simulation study for pool sizes 3, 5, and 7 (with 5,000 iterations each). Wald 95% confidence interval coverage is shown in Figure 4 for the low and high prevalence settings (the moderate setting was similar, but results are not shown). The 95% Wald confidence interval performs well for the combined estimator, with coverage lingering around 95% for moderate sample sizes. The confidence interval coverage drops below 60% very quickly when the pooled testers are ignored. As in the Lilongwe example, confidence intervals are misleading when selection bias exists in the sample.

In small sample sizes for the moderate and high prevalence settings, the empirical standard error for the combined estimator is much larger than the derived standard error (results not shown), due to the fact that all of the pools test positive in a substantial proportion of the simulation runs. The derived large-sample standard error is not valid when all pools test positive, and, in such settings, using the pooled prevalence estimator in practice is not advised. Further, finite sample bias is problematic in small sample sizes when prevalence is moderate to high. Before using the asymptotic normality and variance formula for the combined estimator, it is important to know how many pooled testers are required for these asymptotics to be valid. In order to assess when the large-sample asymptotics hold and the combined prevalence estimator is valid, we calculate the ratio of the empirical mse and the asymptotic mse (see Figure 5 for the low and high prevalence settings). The asymptotic mse is described above, and the empirical mse is defined as the square of the average empirical bias in the combined estimator added to the empirical variance of the combined estimator in the 5000 simulations. Since both empirical variance and bias should be higher than the asymptotic variance and bias in finite samples, this ratio should provide a good metric for gauging the validity of our estimator. When this ratio is less than 1.05, we declare the estimator to be valid.

Table 1 provides suggestions as to minimum sample size and pool size required in the low, moderate, and high prevalence settings in order to obtain a valid estimator. We recommend not using pool sizes over 5 (preferably 3) in the high prevalence setting.

Conclusion

When investigators designing a disease prevalence survey anticipate high refusal rates for individual testing due to disease stigma, offering a pooled testing option and combining pooled and individual sample results has the potential to improve prevalence estimates. Ideally, everyone sampled will consent to either individual or pooled testing. In practice, we anticipate that some individuals will refuse to participate. In Appendix 1 in Additional file 1, we propose several potential adjustments to the prevalence estimator to account for the refusers. We note that the prevalence in the group that refuses to test altogether cannot be estimated without making strong modeling assumptions.

The proposed testing strategy addresses non-response rates in high prevalence, high non-response scenarios. In lower prevalence settings, pooled testing becomes more efficient and higher values of k are acceptable. In settings with high response rates, the combined pooled and individual testing strategy is not recommended, as the additional logistics and cost of implementation would not outweigh the small increase in the accuracy of the prevalence estimates.

We have assumed that, for a given survey, the pool size k does not vary. In high prevalence scenarios where it is possible that all pools might test positive, allowing k to vary could substantially improve the pooled estimator. For instance, [17] partition individuals by risk when forming groups. The form of the estimator for the prevalence among pooled testers would change in this setting and would no longer have a clean form.

Acquiring blood samples for pooled testing also allows the investigator to compare the prevalence in the individual testing population (p1) with the prevalence in the pooled testing population (p2). A test of the hypothesis that p1=p2 is simple to construct. This hypothesis test and a corresponding 95% CI for (p1−p2) can help determine the extent of selection bias in the sample. Evidence that the consenting and part of the refusing populations are not different with respect to disease status is valuable for generalizability of results to the entire population. Note that this is an association test which does not take any covariates into account, though the test could be conducted within strata if sample sizes are large enough.

Techniques have also been developed for regression analyses of disease status on covariates when blood samples are pooled [36–39]. Future work should investigate extending this testing strategy to facilitate regression modeling with the individual and pooled test results. Though we do not want to identify individuals within pooled samples, constructing pools that are homogeneous with respect to the covariates of interest increases the precision of the regression coefficient estimates [36]. Non-random missingness in covariates would likely pose an additional complication in designing a testing strategy to facilitate regression modeling.

Our proposed estimator above assumes a perfect test, but extending the estimator to imperfect tests is straightforward, as shown in [13], insofar as sensitivity and specificity do not vary with pool size. Sensitivity and specificity are generally high for hiv tests. However, if sensitivity and specificity are not close to 1, the merits of this testing strategy should be re-evaluated; imperfect tests can compromise the applicability of pooled testing in high prevalence scenarios [18]. Details about how to extend the estimator for imperfect tests are included in Appendix 2 in Additional file 1. Additionally, if sensitivity and specificity are a function of the pool size, the pooled test is subject to the ’dilution effect,’ substantially complicating prevalence estimation [40]. Future work should investigate extending this testing strategy to account for the dilution effect.

Many testing protocols are currently being used in hiv surveillance programs which aim to optimize efficiency and retain anonymity. There exists an ongoing debate about the ethics of unlinked anonymous testing (uat) [30, 41, 42]. In sentinel populations such as pregnant women at anc clinics, uat without informed consent is a commonly used protocol. Blood samples that are obtained for routine tests are also tested for hiv without any informed consent and are not linked back to the individual in any way. As treatment becomes more available, the ethics of such testing procedures become more questionable, and our suggested protocol requires obtaining informed consent from the individual. Voluntary uat (or uat with informed consent) is a much more widely accepted testing protocol and is currently used in dhs surveys. Informed consent is obtained before testing blood for hiv, but test results are not linked back to the individuals and, those who test cannot learn their disease status. Our testing protocol bypasses any of the ethical issues associated with uat, as sampled individuals have three options: 1) test as an individual and learn their disease status, 2) test as an individual and do not learn their disease status, or 3) submit blood for pooled testing and do not learn their disease status.

Lastly, in selecting survey design parameters, namely pool size and total sample size, an a priori estimate of r2 is necessary. This proportion can be estimated by conducting a small pilot study in the population before the survey is conducted. In constructing our estimators, we assume the data were generated from a simple random sample. The methodology can be extended to stratified or cluster sampling surveys, insofar as pools are composed within the strata and a sufficient proportion of the sample consents to pooled testing within each stratum.

References

Martin-Herz S, Shetty A, Bassett M, Ley C, Mhazo M, Moyo S, Herz A, Katzenstein D: Perceived risks and benefits of HIV testing, and predictors of acceptance of HIV counselling and testing among pregnant women in Zimbabwe. Int J Sex Transm Dis AIDS. 2006, 17: 835-841.

Garcia-Calleja J, Gouws E, Ghys P: National population based HIV, prevalence surveys in sub-Saharan Africa: results and implications for HIV and AIDS estimates. Sex Transm Infect. 2006, 82: iii64-iii70. 10.1136/sti.2006.019901

Mishra V, Barrere B, Hong R, Khan S: Evaluation of bias in HIV seroprevalence estimates from national household surveys. Sex Transm Infect. 2008, 84 (Suppl I): i63-i70.

Gouws E, Mishra V, Fowler T: Comparison of adult HIV prevalence from national population-based surveys and antenatal clinic surveillance in countries with generalised epidemics: implications for calibrating surveillance data. Sex Transm Infect. 2008, 84: i17-i23. 10.1136/sti.2008.030452

Castro A, Farmer P: Understanding and addressing AIDS-related stigma: from anthropological theory to clinical practice in Haiti. Am J Pub Health. 2005, 95: 53-59. 10.2105/AJPH.2003.028563

Vermund SH, Wilson CM: Barriers to HIV testing-where next?. Lancet. 2002, 360: 1186-1187. 10.1016/S0140-6736(02)11291-8

Obermeyer C, Osborn M: The Utilization of Testing and Counseling for HIV: A Review of the Social and Behavioral Evidence. Am J Pub Health. 2007, 97 (10): 1-13.

Reniers G, Eaton J: Refusal bias in HIV prevalence estimates from nationally representative seroprevalence surveys. AIDS. 2009, 23 (5): 621-629. 10.1097/QAD.0b013e3283269e13

Reniers G, Araya T, Berhane Y, Davey G, Sanders E: Implications of the HIV testing protocol for refusal bias in seroprevalence surveys. BMC Pub Health. 2009, 9: 163-172. 10.1186/1471-2458-9-163.

Colfax G, Bindman A: Health benefits and risks of reporting HIV-infected individuals by name. Am J Pub Health. 1998, 88 (6): 876-879. 10.2105/AJPH.88.6.876.

Woods W, Dilley J, Lihatsh T, Sabatino J, Adler B, Rinaldi J: Name-based reporting of HIV-positive test results as a deterrent to testing. Am J Pub Health. 1999, 89 (7): 1097-1100. 10.2105/AJPH.89.7.1097.

Gastwirth J, Hammick P: Estimation of the prevalence of a rare disease, preserving the anonymity of the subjects by group testing: Application to estimating the prevalence of AIDS antibodies in blood donors. J Stat Plann Inference. 1989, 22: 15-27. 10.1016/0378-3758(89)90061-X.

Tu X, Litvak E, Pagano M: On the informativeness and accuracy of pooled testing in estimating prevalence of a rare disease: Application to HIV screening. Biometrika. 1995, 82: 287-297. 10.1093/biomet/82.2.287.

Litvak E, Tu X, Pagano M: Screening for the presence of a disease by pooling sera samples. J Am Stat Assoc. 1994, 89: 424-434. 10.1080/01621459.1994.10476764.

Quinn T, Thomas C, Brookmeyer R, Kline R, Shepherd M, Paranjape R, Mehendale S, Gadkari D, Bollinger R: Feasibility of pooling sera for HIV-1 viral RNA to diagnose acute primary HIV-1 infection and estimate HIV incidence. AIDS. 2000, 14 (17): 2751-2757. 10.1097/00002030-200012010-00015

Bilder C, Tebbs J, Chen P: Informative retesting. J Am Stat Assoc. 2010, 105 (491): 942-955. 10.1198/jasa.2010.ap09231

McMahan C, Tebbs J, Bilder C: Informative Dorfman screening. Biometrics. 2011, 68 (1): 287-296.

Hammick P, Gastwirth J: Group testing for sensitive characteristics: extension to higher prevalence levels. Int Stat Rev/Revue Internationale de Statistique. 1994, 62 (3): 319-331. 10.2307/1403764.

Shisana O, Simbayi L, Parker W, Zuma K, Bhana A, Connolly C, Jooste S, Pillay V: South African HIV Prevalence, HIV Incidence, Behaviour and Communication Study. Cape Town: HSRC Press; 2005.

Tanser F, Hosegood V, Bärnighausen T, Herbst K, Nyirenda M, Muhwava W, Newell C, Viljoen J, Mutevedzi T, Newell M: Cohort Profile: Africa Centre Demographic Information System (ACDIS) and population-based HIV survey. Int J Epidemiol. 2008, 37: 956-962. 10.1093/ije/dym211

Little R, Rubin D: Statistical Analysis with Missing Data. New York: Wiley and Sons; 2002.

Lohr S: Sampling: Design and Analysis. Pacific Grove: Brooks/Cole; 1999.

Tourangeau R, Yan T: Sensitive Questions in Surveys. Psychol Bull. 2007, 133 (5): 859-883.

Curtis S, Sutherland E: Measuring sexual behaviour in the era of HIV/AIDS: the experience of Demographic and Health Surveys and similar enquiries. Sex Transm Infect. 2004, 80 (Suppl II): ii22-ii27.

de Walque D: Sero-Discordant Couples in Five African Countries: Implications for Prevention Strategies. Popul Dev Rev. 2007, 33 (3): 501-523. 10.1111/j.1728-4457.2007.00182.x.

Bärnighausen T, Bor J, Wandira-Kazibwe S, Canning D: Correcting HIV prevalence estimates for survey nonparticipation using Heckman-type selection models. Epidemiology. 2011, 22: 27. 10.1097/EDE.0b013e3181ffa201

National Statistical Office and ORC Macro: Malawi Demographic and Health Survey 2004. Calverton: National Statistical Office (Malawi) and ORC Macro; 2005.

Cox L, Zayatz L: An agenda for research on statistical disclosure limitation. J Official Stat. 1995, 11: 205-20.

Federal Committee on Statistical Methodology: Statistical Policy Working Paper 22: Report on Statistical Disclosure Limitation Methodology. Washington: U.S. Office of Management and Budget; 1994.

Diaz T, De Cock K, Brown T, Ghys P, Boerma J: New strategies for HIV surveillance in resource-constrained settings: an overview. AIDS. 2005, 16 (Suppl 2): S1-S8.

Burrows P: Improved Estimation of Pathogen Transmission Rates by Group Testing. Phytopathology. 1987, 77: 363-365. 10.1094/Phyto-77-363.

Quenouille M: Notes on bias in estimation. Biometrika. 1956, 43: 353-360.

Miller R: The Jackknife-A review. Biometrika. 1974, 61: 1-15.

Shao J, Tu D: The Jackknife and Bootstrap. New York: Springer; 1995.

Hepworth G, Watson R: Debiased estimation of proportions in group testing. J R Soc Stat (Series C). 2009, 58: 105-121. 10.1111/j.1467-9876.2008.00639.x.

Vansteelandt S, Goetghebeur E, Verstraeten T: Regression models for disease prevalence with diagnostic tests on pools of serum samples. Biometrics. 2000, 54 (4): 1126-1133.

Xie M: Regression analysis of group testing samples. Stat Med. 2001, 20: 1957-1969. 10.1002/sim.817

Bilder C, Tebbs J: Bias, efficiency, and agreement for group-testing regression models. J Stat Comput Simul. 2009, 79: 67-80. 10.1080/00949650701608990

Chen P, Tebbs J, Bilder C: Group testing regression models with fixed and random effects. Biometrics. 2009, 65 (4): 1270-1278. 10.1111/j.1541-0420.2008.01183.x

Hung M, Swallow W: Robustness of group testing in the estimation of proportions. Biometrics. 1999, 55: 231-237. 10.1111/j.0006-341X.1999.00231.x

Krishnan S, Jesani A: Unlinked anonymous HIV testing in population-based surveys in India. Indian J Med Ethics. 2009, 6 (4): 182-184.

Kessel A, Datta J, Wellings K, Perman S: The ethics of unlinked anonymous testing of blood: views from in-depth interviews with key informants in four countries. BMJ Open. 2012, 2 (6). doi:10.1136/bmjopen-2012-001427.

Acknowledgements

The authors thank three anonymous reviewers for helpful comments on the manuscript. LH received funding from NIH T32 AI007358. MP received funding from NIH Grant R01 AI097015 and NIH Grant AI083036-03.

Author information

Authors and Affiliations

Corresponding author

Additional information

Competing interests

Both authors declare that they have no competing interests.

Authors’ contributions

LH performed statistical simulations and derivations. MP supervised simulation and derivations. Both authors wrote and reviewed the final manuscript.

Electronic supplementary material

12982_2012_108_MOESM1_ESM.pdf

Additional file 1: Appendix for “Estimating HIV prevalence from surveys with low individual consent rates: annealing individual and pooled samples”.(PDF 209 KB)

Authors’ original submitted files for images

Below are the links to the authors’ original submitted files for images.

Rights and permissions

This article is published under license to BioMed Central Ltd. This is an Open Access article distributed under the terms of the Creative Commons Attribution License (http://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Hund, L., Pagano, M. Estimating HIV prevalence from surveys with low individual consent rates: annealing individual and pooled samples. Emerg Themes Epidemiol 10, 2 (2013). https://doi.org/10.1186/1742-7622-10-2

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/1742-7622-10-2